Salesforce Einstein Studio Delivers on BYOM

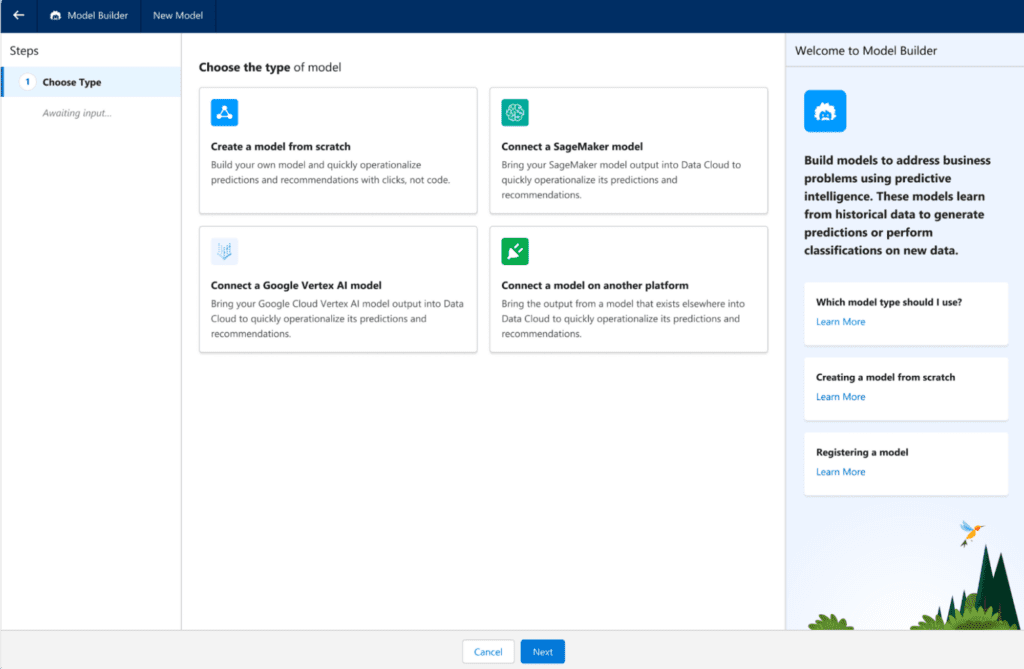

Salesforce announced the general availability of Einstein Studio last week. It is a new bring-your-own-model (BYOM) solution that connects Salesforce Data Cloud to external AI platforms such as Amazon SageMaker and Google Vertex AI. In this post, I will introduce Einstein Studio and give quick overviews of Amazon SageMaker and Google Vertex AI.


Introducing Einstein Studio

Einstein Studio now connects Data Cloud, Salesforce’s real-time customer data platform, to SageMaker and Vertex AI for building custom AI models. “Einstein Studio makes it faster and easier to run and deploy enterprise-ready AI across every part of the business, bringing trusted, open, and real-time AI experiences to every application and workflow,” said Rahul Auradkar, Salesforce EVP & GM, Unified Data Services & Einstein, in an interview.

Einstein Studio will be available only to a limited set of Salesforce customers: existing Data Cloud customers who have, or plan to have, model training projects.

Direct System Integration

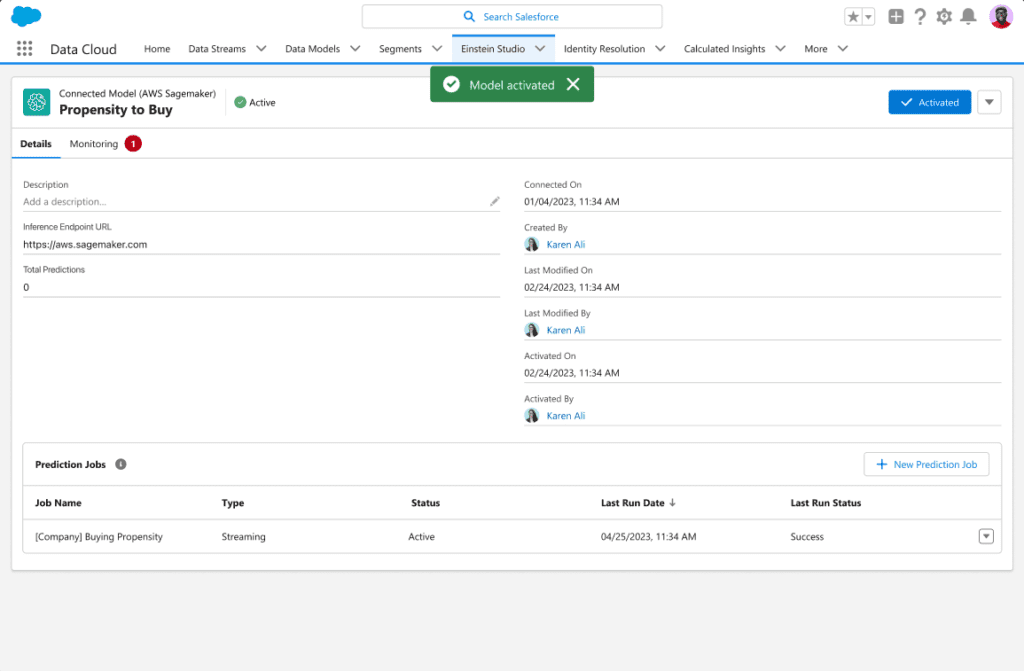

With Einstein Studio, companies can now securely expose their proprietary CRM data from Data Cloud to train custom models on SageMaker or Vertex AI without complex ETL processes. “Our point was we’ll meet the customer where they want to be met, which is where they want to build their models, and then they bring their predictions back in and then we support that through Data Cloud,” Mr. Auradkar continued in our interview.

Real-Time Data Connectivity

This integration addresses a common roadblock: access to quality, up-to-date data for model training. To solve it, Einstein Studio gives SageMaker and Vertex AI direct access to live Salesforce customer data.

Rather than moving data between systems, the zero-ETL framework allows teams to instantly leverage real-time CRM data to train models tailored to their business. Customers also gain governance controls around data access.

What is Amazon SageMaker?

Amazon SageMaker is a fully managed service from AWS that gives developers and data scientists tools to build, train, and deploy machine learning models quickly at enterprise scale. It supports popular frameworks like TensorFlow, PyTorch, and scikit-learn. It also includes purpose-built features for data preparation, model training, tuning, deployment, monitoring, and governance.

The target audience for SageMaker consists of data scientists and ML engineers who want to leverage AWS infrastructure and services to build custom models tailored to their business needs.
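To make the "bring their predictions back in" loop more concrete, here is a minimal sketch of scoring customer records against a model already deployed to a SageMaker endpoint. The `invoke_endpoint` call on the boto3 `sagemaker-runtime` client is real; everything else is a hypothetical assumption for illustration: the record shape (an `id` plus feature fields), the feature names, and the model's input/output format (header-less CSV in, one score per record out). This is not how Einstein Studio itself calls SageMaker.

```python
import csv
import io
from typing import Any, Dict, List, Sequence


def to_csv_payload(rows: Sequence[Sequence[float]]) -> str:
    """Serialize feature rows as header-less CSV, one record per line."""
    buf = io.StringIO()
    csv.writer(buf, lineterminator="\n").writerows(rows)
    return buf.getvalue()


def score_records(
    client: Any,
    endpoint_name: str,
    records: List[Dict[str, Any]],
    feature_names: Sequence[str],
) -> Dict[str, float]:
    """Score records on a SageMaker endpoint and key the scores by record ID.

    Assumes (hypothetically) that the deployed model accepts text/csv and
    returns one numeric score per record, newline- or comma-separated.
    """
    rows = [[float(rec[name]) for name in feature_names] for rec in records]
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="text/csv",
        Body=to_csv_payload(rows).encode("utf-8"),
    )
    # The response body is a stream; read and decode it before parsing.
    raw = response["Body"].read().decode("utf-8")
    scores = [float(tok) for tok in raw.replace(",", "\n").split()]
    if len(scores) != len(records):
        raise ValueError(f"expected {len(records)} scores, got {len(scores)}")
    # Map each score back to the ID of the record it belongs to.
    return {rec["id"]: score for rec, score in zip(records, scores)}


def make_runtime_client(region_name: str) -> Any:
    """Create a real SageMaker runtime client (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helpers above have no AWS dependency

    return boto3.client("sagemaker-runtime", region_name=region_name)
```

With credentials configured, a call might look like `score_records(make_runtime_client("us-east-1"), "churn-endpoint", records, ["tenure_months", "open_cases"])`, where the endpoint and feature names are hypothetical. The client is passed in rather than created inside `score_records` so the function can be exercised with a stub.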

What is Google Vertex AI?

Google Vertex AI is an end-to-end managed ML platform that offers an integrated toolchain for building, deploying, and managing ML workflows. It connects natively to datasets in BigQuery and provides MLOps capabilities such as monitoring, explainability, and governance.

Vertex AI gives access to Google’s pre-trained models and AI research through services such as Model Garden and Generative AI Studio. Its target users are data scientists, ML researchers, and engineers who want to tap into Google’s AI expertise and cloud infrastructure.
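For comparison, here is a minimal sketch of sending customer records to a model deployed on a Vertex AI endpoint. It relies on the `google-cloud-aiplatform` SDK's `aiplatform.init`, `aiplatform.Endpoint`, and `Endpoint.predict`, whose response exposes a `predictions` list. The project, region, endpoint ID, feature names, and instance format (one dictionary of feature values per record) are hypothetical; the format a real endpoint expects depends on the model deployed behind it.

```python
from typing import Any, Dict, List, Sequence


def build_instances(
    records: List[Dict[str, Any]], feature_names: Sequence[str]
) -> List[Dict[str, Any]]:
    """Turn each record into one prediction instance holding only model features."""
    return [{name: rec[name] for name in feature_names} for rec in records]


def score_with_vertex(
    endpoint: Any, records: List[Dict[str, Any]], feature_names: Sequence[str]
) -> Dict[str, Any]:
    """Send records to a Vertex AI endpoint and key the predictions by record ID."""
    response = endpoint.predict(instances=build_instances(records, feature_names))
    predictions = list(response.predictions)
    if len(predictions) != len(records):
        raise ValueError(
            f"expected {len(records)} predictions, got {len(predictions)}"
        )
    # Predictions come back in the same order as the instances sent.
    return {rec["id"]: pred for rec, pred in zip(records, predictions)}


def make_endpoint(project: str, location: str, endpoint_id: str) -> Any:
    """Return a real Vertex AI endpoint handle (needs google-cloud-aiplatform)."""
    from google.cloud import aiplatform  # imported lazily; requires GCP credentials

    aiplatform.init(project=project, location=location)
    return aiplatform.Endpoint(endpoint_name=endpoint_id)
```

As with any hosted model, the record ID stays on the caller's side and is only used to attach each prediction to the right customer record afterwards.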

Comparing SageMaker and Vertex AI

As leading cloud AI platforms, Amazon SageMaker and Google Vertex AI have similarities but also some key differences in their approach.

Access to Models

Both SageMaker and Vertex AI allow access to a catalog of pre-trained foundation models that can be used directly or customized:

- SageMaker offers pre-trained foundation models through SageMaker JumpStart, and AWS also provides Amazon Bedrock, a separate managed service for accessing foundation models through an API. Bedrock includes models from AI startups like Anthropic, Cohere, and Stability AI.

- Vertex AI offers its Model Garden, which contains Google’s own models like PaLM as well as open-source and partner models. Developers can access models through APIs.

Model Building

For training custom models, SageMaker and Vertex AI offer managed environments with optimized infrastructure:

- SageMaker includes features such as automatic hyperparameter tuning, distributed training, profiling, and debugging to accelerate model development.

- Vertex AI provides Vertex AI Workbench as an integrated notebook environment for building, training, and deploying models using Google Cloud’s AI services and TensorFlow Extended (TFX).
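Automatic hyperparameter tuning automates a loop of the following shape: propose a configuration, train and evaluate a model with it, and keep the best result. The sketch below is a plain random search with a toy objective standing in for a training run. It is only an illustration of that loop, not how SageMaker's tuner or Vertex AI Vizier search; both services offer more sample-efficient strategies such as Bayesian optimization. The parameter name `learning_rate` and the objective are hypothetical.

```python
import random
from typing import Callable, Dict, Optional, Tuple

Params = Dict[str, float]


def random_search(
    objective: Callable[[Params], float],
    space: Dict[str, Tuple[float, float]],
    n_trials: int,
    seed: int = 0,
) -> Tuple[Params, float]:
    """Sample configurations uniformly from `space`; return the highest-scoring one."""
    if n_trials < 1:
        raise ValueError("n_trials must be at least 1")
    rng = random.Random(seed)  # seeded so results are reproducible
    best_params: Optional[Params] = None
    best_score = float("-inf")
    for _ in range(n_trials):
        params = {name: rng.uniform(lo, hi) for name, (lo, hi) in space.items()}
        # In practice this would train a model and return a validation metric.
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    assert best_params is not None
    return best_params, best_score


def toy_objective(params: Params) -> float:
    """Hypothetical stand-in for a training run whose best learning rate is 0.1."""
    return -(params["learning_rate"] - 0.1) ** 2
```

Calling `random_search(toy_objective, {"learning_rate": (0.0, 1.0)}, n_trials=200)` returns a learning rate close to 0.1. Managed tuners add what this loop leaves out, such as running trials as parallel training jobs and stopping unpromising ones early.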

MLOps Capabilities

Both platforms provide the capability to operationalize models, including monitoring, explainability, and governance:

- SageMaker includes tools for model monitoring, drift detection, bias detection, access controls, and lineage tracking.

- Vertex AI offers an end-to-end MLOps toolkit including Vertex Pipelines, Vertex Feature Store, Vertex Experiments, and Vertex AI Vizier.
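Drift detection, in its simplest form, compares the distribution of a feature at inference time with its distribution in the training baseline. The sketch below computes the population stability index (PSI), one common drift metric. It is a generic illustration, not the metric or implementation used by SageMaker or Vertex AI monitoring; the bin edges and the small `eps` floor for empty bins are assumptions.

```python
import bisect
import math
from typing import List, Sequence


def bin_proportions(
    values: Sequence[float], edges: Sequence[float], eps: float = 1e-6
) -> List[float]:
    """Share of values per bin; bins are [edges[i], edges[i+1]), last bin closed.

    Empty bins get a small floor `eps` so the log term in PSI stays finite.
    """
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        if i == n_bins and v == edges[-1]:
            i -= 1  # include the right-most edge in the last bin
        if 0 <= i < n_bins:  # values outside the edges are ignored
            counts[i] += 1
    total = sum(counts)
    if total == 0:
        raise ValueError("no values fell inside the bin edges")
    return [max(c / total, eps) for c in counts]


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], edges: Sequence[float]
) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))
```

Identical distributions give a PSI of 0. A frequently cited rule of thumb treats values above roughly 0.25 as a significant shift, though thresholds are usually tuned per feature.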

Infrastructure and Integration

As fully managed services, both platforms integrate tightly with their cloud provider’s data and AI services:

- SageMaker natively connects to AWS data services like S3, Redshift, and DynamoDB.

- Vertex AI integrates natively with BigQuery, Dataproc, and other Google Cloud data services.

While the two platforms overlap significantly, each draws its strengths from the infrastructure and services of its own cloud, AWS or Google Cloud. You should evaluate them based on your existing cloud ecosystem.

Salesforce AI Cloud Keeps Pace

In a crowded AI market, Salesforce is betting that its customer data heritage via Data Cloud will prove a competitive edge. With data issues stalling many AI initiatives, Einstein Studio offers a path to unifying enterprise data with the latest cloud AI innovations.

It is nice to see Salesforce keeping its AI Cloud promises. Just two weeks ago, Salesforce announced that parts of Sales GPT and Service GPT had moved into general availability. Now, with the delivery of Einstein Studio, Salesforce has made good on its BYOM promise and kept up its pace of AI rollouts.