SDLC for Prompts: The Next Evolution in Enterprise AI Development

In the rapidly evolving landscape of enterprise artificial intelligence (AI), the emergence of Large Language Models (LLMs) like GPT-4 and Claude 2 has shifted the paradigm of application development. As businesses strive to harness the potential of these models, a new concept is taking center stage: the Software Development Life Cycle (SDLC) for prompts. The SDLC for prompts is a structured approach to developing, deploying, and maintaining the prompts used in AI applications, so that they stay aligned with the specific needs of the organization.

In this post, I trace the evolution of the SDLC in software development and then walk through each step of the SDLC for prompts.

The Evolution of the SDLC

Historically, the SDLC has been the cornerstone of software development practice. Whether teams are building cloud-native apps that run on Kubernetes or critical enterprise apps on SaaS platforms like Salesforce, the SDLC provides a structured framework for each phase of development, from initial requirements analysis through design, coding, testing, deployment, and maintenance.

However, with the advent of AI and machine learning, the traditional SDLC has had to evolve. Training an LLM, or developing AI applications like the Prompt Engineering Platform (PEP) and Executive Thinking Partner (ETP), calls for a new kind of SDLC: a more flexible, iterative process that allows for continuous learning and improvement. The SDLC for prompts reflects this shift from rigid, linear stages to a more cyclical model.

The Novelty of an SDLC for Prompts

In this context, the SDLC for prompts emerges as a new concept. Rather than treating prompts as mere inputs to AI models, it treats them as critical components that require careful design, testing, and refinement. This is particularly important in an enterprise setting, where prompts must be tailored to the specific needs and objectives of the organization.

The SDLC for prompts brings uniformity and scalability to AI application development. By systematically managing the lifecycle of prompts, enterprises can use their AI tools effectively and consistently, which leads to better outcomes.

The Importance of an SDLC for Prompts

An SDLC for prompts enhances the effectiveness of LLMs by ensuring that prompts are well-crafted, contextually relevant, and aligned with the task at hand. This is not a trivial concern. The quality and design of prompts can significantly impact the performance of an AI model. Poorly designed prompts can lead to ambiguous or incorrect outputs, while well-crafted prompts can guide the model to produce more accurate and useful results.

Furthermore, an SDLC for prompts allows for the continuous refinement of prompts based on feedback and performance metrics. Just as software applications need to be updated and maintained over time, prompts also need to be iteratively improved and adapted to changing requirements and conditions.

SDLC for Prompts Explained

The need for an SDLC for prompts becomes clear once a prompt is viewed as a functional unit within an enterprise architecture. Well-designed prompts, especially parameterized ones, become invocable processes that execute functions with variable inputs. In this way, prompts become process artifacts that require the same careful management as source code.
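To make the idea of a prompt as an invocable process concrete, here is a minimal Python sketch. It assumes a prompt is stored as a template whose `{placeholders}` are its parameters; the template text and the `summarize_ticket_prompt` function are hypothetical, not taken from any particular platform.

```python
# Hypothetical prompt template; each {placeholder} is a parameter of the prompt.
SUMMARIZE_TICKET = (
    "You are a support analyst. Summarize the following {product} ticket "
    "in at most {max_words} words, then list any action items.\n\n"
    "Ticket:\n{ticket_text}"
)


def summarize_ticket_prompt(product: str, ticket_text: str, max_words: int = 50) -> str:
    """Invoke the prompt like a function: typed inputs in, concrete prompt text out.

    str.format raises KeyError if the template names a parameter that is not
    supplied, so a mismatch between template and signature fails loudly.
    """
    return SUMMARIZE_TICKET.format(
        product=product, ticket_text=ticket_text, max_words=max_words
    )


if __name__ == "__main__":
    print(summarize_ticket_prompt("Billing", "I was charged twice this month."))
```

Wrapping the template in a typed function is what lets it be versioned, tested, and reviewed like any other piece of source code.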

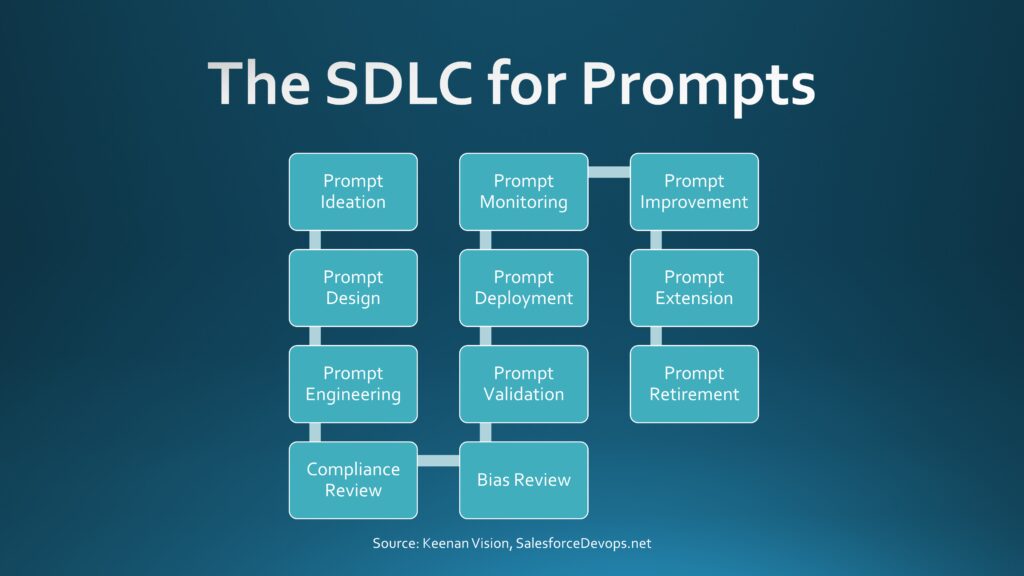

Prompt Ideation

The first stage of the SDLC for prompts is identifying a workflow or value stream that would benefit from a prompt. This means understanding the existing process, identifying opportunities for improvement, and defining the goals and success metrics for the prompt. This stage sets the direction for the rest of the prompt development process.

Prompt Design

Once the need for a prompt is identified, the next step is to design the prompt. This includes detailing the prompt objective, parameters, data needs, and expected outputs. The design stage lays the groundwork for the prompt and ensures it is aligned with the identified goals and metrics.
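A design can be captured as a structured record rather than free text. The sketch below assumes a simple Python dataclass; `PromptSpec`, its fields, and the `check_template` helper are hypothetical names chosen for illustration. The helper uses the standard library's `string.Formatter().parse` to compare a draft template's placeholders against the declared parameters.

```python
from dataclasses import dataclass, field
from string import Formatter


@dataclass
class PromptSpec:
    """Hypothetical design record for one prompt; field names are illustrative."""

    name: str
    objective: str
    parameters: list[str]            # inputs the caller must supply
    data_needs: list[str]            # data sources the prompt will read
    expected_output: str             # format or schema the output must follow
    success_metrics: dict[str, float] = field(default_factory=dict)

    def check_template(self, template: str) -> dict[str, list[str]]:
        """Compare a draft template's {placeholders} with the declared parameters."""
        used = {f for _, f, _, _ in Formatter().parse(template) if f}
        return {
            "undeclared": sorted(used - set(self.parameters)),
            "unused": sorted(set(self.parameters) - used),
        }


if __name__ == "__main__":
    spec = PromptSpec(
        name="summarize_ticket",
        objective="Summarize support tickets for triage",
        parameters=["product", "ticket_text", "max_words"],
        data_needs=["support ticket body"],
        expected_output="Summary paragraph followed by a bulleted list of action items",
        success_metrics={"reviewer_approval_rate": 0.9},  # hypothetical target
    )
    print(spec.check_template("Summarize this {product} ticket for {team}: {ticket_text}"))
```

Keeping the spec next to the template gives later stages, such as validation and monitoring, a fixed reference for the goals and metrics agreed on up front.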

Prompt Engineering

The engineering phase is an iterative loop of drafting the prompt, running it against the model, and revising it, drawing on Natural Language Processing (NLP) techniques where useful. Each iteration refines the prompt so that it accurately captures the requirements defined in the design phase.
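One way to make this iteration repeatable is to score each candidate wording against a small set of test cases. This is a minimal sketch under stated assumptions: `model` is any function that sends a prompt to an LLM and returns text (an echo stand-in is used here), and pass/fail is judged by simple keyword matching, a deliberately crude metric chosen for illustration.

```python
from typing import Callable

Model = Callable[[str], str]  # assumption: any function that sends a prompt to an LLM


def score_variant(template: str, cases: list[dict], model: Model) -> float:
    """Fraction of test cases whose output contains every expected keyword."""
    passed = 0
    for case in cases:
        output = model(template.format(**case["inputs"])).lower()
        if all(k.lower() in output for k in case["expected_keywords"]):
            passed += 1
    return passed / len(cases)


def best_variant(variants: list[str], cases: list[dict], model: Model) -> tuple[str, float]:
    """Score every candidate wording and return the best (the first one wins ties)."""
    scored = [(v, score_variant(v, cases, model)) for v in variants]
    return max(scored, key=lambda pair: pair[1])


if __name__ == "__main__":
    def echo(prompt: str) -> str:
        return prompt  # stand-in model that just echoes the prompt back

    cases = [{"inputs": {"text": "refund request"}, "expected_keywords": ["action items"]}]
    variants = ["Summarize: {text}", "Summarize and list action items: {text}"]
    print(best_variant(variants, cases, echo))
```

In practice the keyword check would usually give way to a task-specific evaluator, but the loop structure stays the same.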

Compliance Review

Before deploying the prompt, it’s critical to review it for compliance with privacy and security policies. This review ensures the prompt adheres to all relevant regulations and does not compromise the privacy or security of the data it will interact with.
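Part of a compliance review can be automated as a pre-deployment check that scans prompt templates and sample rendered prompts for data that should not be there. The patterns below are illustrative assumptions only; a real review would follow the organization's own data-classification policy and would likely rely on dedicated PII-detection tooling rather than a handful of regular expressions.

```python
import re

# Illustrative patterns only; not a complete or authoritative PII policy.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "credential_hint": re.compile(r"(?i)\b(api[_-]?key|secret|password)\s*[:=]"),
}


def compliance_findings(prompt_text: str) -> list[str]:
    """Return the names of sensitive patterns found in a prompt, in pattern order."""
    return [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(prompt_text)]


if __name__ == "__main__":
    print(compliance_findings("Summarize the ticket from jane@example.com."))
```

An automated scan like this catches obvious leaks early; it supplements, rather than replaces, a human review against the relevant regulations.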

Bias Review

The bias review phase involves analyzing the prompt for any potential biases and making necessary adjustments. This review is critical to ensure fairness and accuracy in the outputs of the AI model.
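A common technique for probing bias is counterfactual testing: render the prompt several times, changing only one sensitive attribute, and compare the scored outputs. The sketch below assumes you supply a `model` function and a `score` function that turns an output into a number; the applicant-rating template and the deliberately biased toy model are hypothetical and exist only to exercise the code.

```python
from typing import Callable


def counterfactual_gaps(
    template: str,
    base_inputs: dict[str, str],
    attribute: str,
    values: list[str],
    model: Callable[[str], str],
    score: Callable[[str], float],
) -> dict[str, float]:
    """Render the prompt once per attribute value, holding all other inputs fixed,
    and return each value's output score minus the score for values[0]."""
    scores = {}
    for value in values:
        prompt = template.format(**{**base_inputs, attribute: value})
        scores[value] = score(model(prompt))
    baseline = scores[values[0]]
    return {value: s - baseline for value, s in scores.items()}


def flag_gaps(gaps: dict[str, float], tolerance: float) -> list[str]:
    """Attribute values whose score differs from the baseline by more than tolerance."""
    return [value for value, gap in gaps.items() if abs(gap) > tolerance]


if __name__ == "__main__":
    def toy_model(prompt: str) -> str:
        return "7" if "north" in prompt else "5"  # deliberately biased stand-in

    gaps = counterfactual_gaps(
        "Rate this applicant from {region} (1-10): {profile}",
        {"profile": "steady income"}, "region", ["north", "south"], toy_model, float,
    )
    print(gaps, flag_gaps(gaps, tolerance=1.0))
```

A gap larger than the chosen tolerance does not prove bias on its own, but it marks the prompt for closer human review.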

Prompt Validation

The validation phase involves thorough testing of the prompt with representative data and users. This step ensures the prompt works as expected and meets the goals and metrics defined in the ideation stage.
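Validation can be expressed as a gate: run the prompt over representative cases and approve it only if the pass rate reaches the target set during ideation. In this minimal sketch, `ValidationReport`, `validate_prompt`, the `check` function, and any threshold value are hypothetical; the model is passed in as a plain function.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ValidationReport:
    pass_rate: float
    failed_case_ids: list[str]
    approved: bool


def validate_prompt(
    template: str,
    cases: list[tuple[str, dict[str, str], str]],  # (case_id, inputs, expected)
    model: Callable[[str], str],
    check: Callable[[str, str], bool],             # (output, expected) -> passed?
    threshold: float,
) -> ValidationReport:
    """Run every representative case and approve only if the pass rate meets the target."""
    failed = [
        case_id
        for case_id, inputs, expected in cases
        if not check(model(template.format(**inputs)), expected)
    ]
    pass_rate = 1 - len(failed) / len(cases)
    return ValidationReport(pass_rate, failed, pass_rate >= threshold)
```

For example, with an echo stand-in model and `check=lambda output, expected: expected in output`, a run in which one of two cases fails yields `pass_rate=0.5` and `approved=False` at a threshold of 0.9, and the report lists exactly which case to investigate.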

Prompt Deployment

Once validated, the prompt is cataloged and integrated into the target systems. This step brings the prompt into operation, where it can start delivering value.
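What "cataloged" means will vary by organization. As one possible shape, here is a minimal in-memory catalog that versions each prompt and tracks its lifecycle status; `PromptCatalog` and `CatalogEntry` are hypothetical, and a production catalog would more likely live in a database or registry service.

```python
from dataclasses import dataclass


@dataclass
class CatalogEntry:
    name: str
    version: int
    template: str
    owner: str
    status: str = "active"  # "active", "deprecated", or "retired"


class PromptCatalog:
    """Hypothetical in-memory prompt catalog keyed by (name, version)."""

    def __init__(self) -> None:
        self._entries: dict[tuple[str, int], CatalogEntry] = {}

    def register(self, name: str, template: str, owner: str) -> CatalogEntry:
        """Store a new version, numbered one higher than the latest existing one."""
        version = 1 + max((v for (n, v) in self._entries if n == name), default=0)
        entry = CatalogEntry(name, version, template, owner)
        self._entries[(name, version)] = entry
        return entry

    def latest(self, name: str) -> CatalogEntry:
        """Return the highest-numbered active version, or raise KeyError."""
        active = [e for (n, _), e in self._entries.items() if n == name and e.status == "active"]
        if not active:
            raise KeyError(f"no active version of prompt {name!r}")
        return max(active, key=lambda e: e.version)
```

Target systems then resolve prompts by name through `latest()` instead of embedding prompt text directly, which lets later improvement and retirement happen without redeploying every caller.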

Prompt Monitoring

After deployment, the prompt is continuously monitored to track usage, performance against goals, errors, and drift. This monitoring helps ensure the prompt continues to perform optimally and meet its objectives.
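Monitoring can start with a few counters per prompt. The sketch below is a hypothetical `PromptMonitor` that records usage, errors, and a rolling quality score, and it treats drift simply as the rolling mean falling below a baseline by more than a tolerance. That definition of drift is an assumption made for illustration; teams may also track shifts in the inputs themselves.

```python
from collections import deque
from statistics import mean
from typing import Optional


class PromptMonitor:
    """Hypothetical per-prompt monitor: usage, errors, and rolling quality."""

    def __init__(self, baseline: float, window: int = 100, tolerance: float = 0.05) -> None:
        self.baseline = baseline      # quality score measured at validation time
        self.tolerance = tolerance    # allowed drop before it counts as drift
        self.calls = 0
        self.errors = 0
        self.recent = deque(maxlen=window)  # only the last `window` scores are kept

    def record(self, score: Optional[float]) -> None:
        """Log one call: pass its quality score, or None if the call errored."""
        self.calls += 1
        if score is None:
            self.errors += 1
        else:
            self.recent.append(score)

    @property
    def error_rate(self) -> float:
        return self.errors / self.calls if self.calls else 0.0

    def drifted(self) -> bool:
        """True if rolling mean quality is more than `tolerance` below baseline."""
        return bool(self.recent) and mean(self.recent) < self.baseline - self.tolerance
```

Because the window is bounded, a sustained decline shows up quickly even for a prompt with a long, healthy history.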

Prompt Improvement

Based on the metrics and feedback gathered during the monitoring phase, the prompt is refined and enhanced. This continuous improvement process helps ensure the prompt remains effective and relevant over time.
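Before a refined prompt replaces the one in production, it helps to compare the two on the same evaluation cases. This minimal sketch assumes per-case scores are already available; the `should_promote` function and its default thresholds are hypothetical choices, not established values.

```python
from statistics import mean


def should_promote(
    current: list[float],
    candidate: list[float],
    min_gain: float = 0.02,
    max_regressions: int = 0,
) -> bool:
    """Promote the refined prompt only if, on the same cases, its mean score beats
    the current version by at least min_gain and it does worse on no more than
    max_regressions individual cases."""
    if not current or len(current) != len(candidate):
        raise ValueError("both score lists must be non-empty and cover the same cases")
    regressions = sum(1 for old, new in zip(current, candidate) if new < old)
    return mean(candidate) - mean(current) >= min_gain and regressions <= max_regressions
```

Checking per-case regressions as well as the mean guards against a refinement that improves the average while breaking a case that matters.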

Prompt Extension

The extension phase involves identifying additional applications for the prompt to extend its value. This might involve adapting the prompt for use in different workflows or expanding its capabilities to address new requirements.
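When an existing prompt is parameterized, extending it to a new workflow can be as simple as fixing some of its parameters. The sketch below uses Python's `functools.partial` for this; the base template and the two derived workflows are hypothetical examples.

```python
from functools import partial

# Hypothetical general-purpose summarization prompt.
BASE_TEMPLATE = (
    "You are a {role}. Summarize the following {doc_type} for {audience} "
    "in at most {max_words} words.\n\n{text}"
)


def render(template: str, **params: object) -> str:
    """Fill in a template; raises KeyError if any placeholder is left unbound."""
    return template.format(**params)


# Extend the same prompt to two hypothetical workflows by pre-binding parameters.
summarize_contract = partial(
    render, BASE_TEMPLATE, role="legal analyst", doc_type="contract", audience="executives"
)
summarize_incident = partial(
    render, BASE_TEMPLATE, role="site reliability engineer",
    doc_type="incident report", audience="on-call engineers",
)

if __name__ == "__main__":
    print(summarize_contract(max_words=100, text="The supplier will deliver monthly reports."))
```

Each derived workflow still shares one underlying template, so a fix to the base prompt reaches every extension at once.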

Prompt Retirement

Finally, when a prompt becomes obsolete or is superseded by a more effective solution, it is retired. Retirement involves decommissioning the prompt and making sure any dependencies on it are appropriately managed, which keeps the prompt ecosystem up to date and effective.
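Managing dependencies at retirement mostly means knowing who still calls the prompt. This minimal sketch assumes a mapping from prompt names to the systems that use them; `plan_retirement` is a hypothetical helper that refuses to retire a prompt with active callers unless a replacement is named, and otherwise returns a migration plan.

```python
from typing import Optional


def plan_retirement(
    name: str,
    dependents: dict[str, set[str]],
    replacement: Optional[str] = None,
) -> dict[str, str]:
    """Return a {calling_system: replacement_prompt} migration plan for `name`.

    Raises RuntimeError if systems still depend on the prompt and no
    replacement has been named, so callers are never silently stranded.
    """
    callers = sorted(dependents.get(name, set()))
    if callers and replacement is None:
        raise RuntimeError(f"cannot retire {name!r}; still used by: {', '.join(callers)}")
    return {system: replacement for system in callers}


if __name__ == "__main__":
    usage = {"summarize_ticket_v1": {"crm", "support-portal"}}
    print(plan_retirement("summarize_ticket_v1", usage, replacement="summarize_ticket_v2"))
```

An empty plan means nothing depends on the prompt any longer, so it can be decommissioned directly.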

Implementing an SDLC for Prompts

Implementing an SDLC for prompts requires the involvement and commitment of IT leaders and stakeholders across the organization. It involves integrating the SDLC for prompts within the broader AI development process and aligning it with the strategic objectives of the organization.

IT leaders have a critical role to play in supporting an SDLC for prompts. They need to advocate for the importance of prompt design and management, allocate resources for prompt development and refinement, and ensure that the benefits of this approach are communicated across the organization.

The Industry Needs an SDLC for Prompts

The SDLC for prompts represents the next step in the evolution of AI application development. As enterprises strive to leverage the power of LLMs, the need for a structured approach to prompt development becomes increasingly apparent. By adopting an SDLC for prompts, enterprises can harness the full potential of their AI tools, leading to improved outcomes and a stronger competitive edge in the AI-driven future.