Beyond Coding: The New Era of AI Copilot Creation

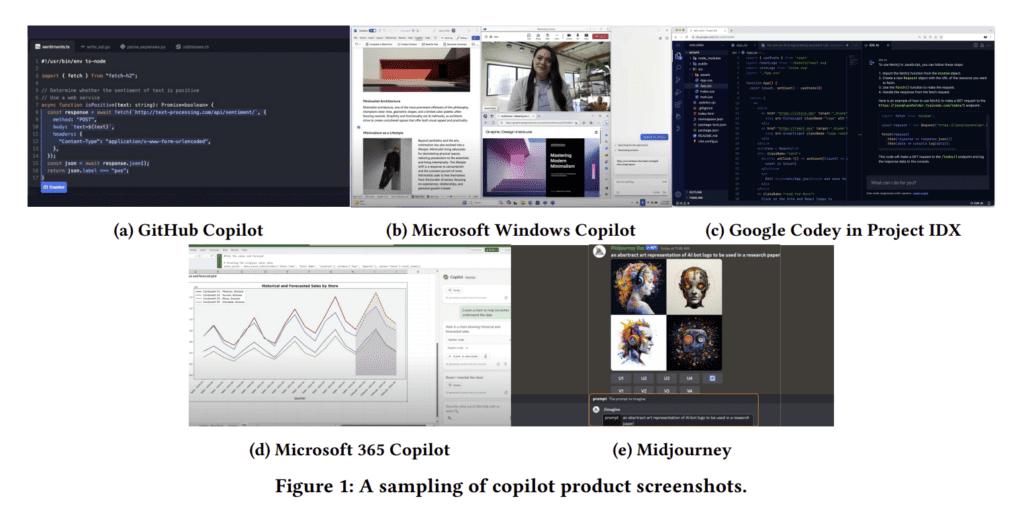

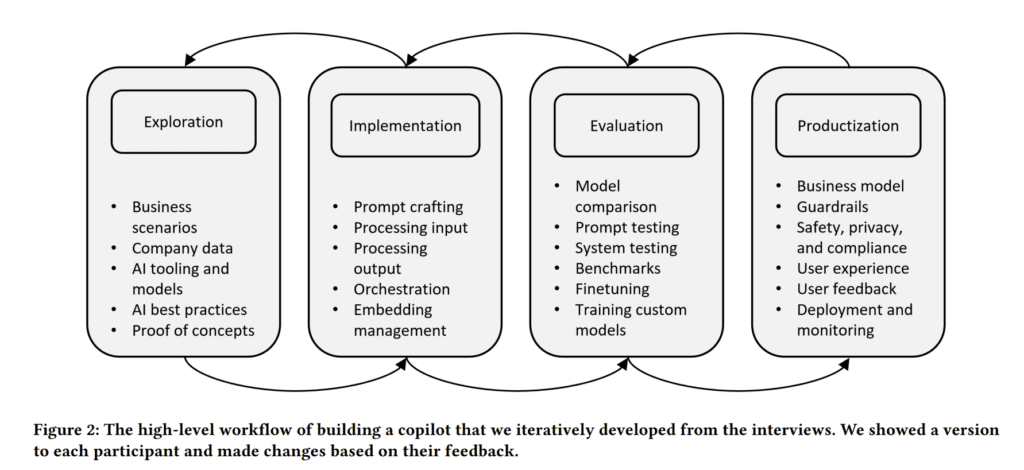

As artificial intelligence continues its steady march into mainstream software applications, many companies are racing to create their own “AI copilots” – intelligent assistants that can understand natural language requests and help users accomplish tasks. However, this new frontier of AI-powered software development comes loaded with obstacles, according to a study by Microsoft researchers that was released as a preprint last week.

The paper reveals the steep learning curve faced by software engineers tasked with integrating large language models into commercial applications. While the raw AI capabilities seem magical, putting that research into practice involves dealing with fragile systems, intricate testing requirements, and a lack of standards or best practices.

“This is an exploratory period for the new field of AI Engineering,” explains lead author Chris Parnin, a senior researcher at Microsoft. “Our participants are on the ground trying to figure out how to take cutting-edge AI research and turn it into reliable and useful software.”

The Art and Science of Prompt Engineering

Central to building any AI copilot is the process of “prompt engineering” – using carefully constructed text prompts to make a large language model understand a user’s goal and provide a relevant, actionable response. For instance, an AI assistant meant to help developers write code would need prompts that can translate natural language requests into usable code snippets.

But as Parnin and his colleagues discovered, designing functional prompts involves far more guesswork than guidelines. “It’s more of an art than a science,” noted one survey participant. “The ecosystem is evolving quickly and moving so fast,” said another. Engineers found themselves stuck in time-consuming cycles of trial-and-error, struggling to wrangle inconsistent model behaviors using makeshift methods.

Once reasonably effective prompts were created, new issues emerged around version control and testing. Companies soon amassed large, fast-changing libraries of prompt components, but lacked established software engineering practices to manage them.

The Hidden Toil of Orchestration

Beyond individual prompts, many AI copilots rely on orchestrating complex chains of prompts and model interactions. This helps handle multi-step tasks, translate between input commands and system actions, and integrate real-time context about users and applications.

However, the researchers found that this orchestration layer breeds its own set of headaches. Engineers struggled to build reliable workflows using agent-based approaches, provide transparency into model reasoning, set safe boundaries around potentially harmful model behaviors, and carry user conversations across multiple turns.

“The behavior is really hard to manage and steer,” explained one survey participant. The study's findings highlight the tendency of AI copilots to get stuck in loops or steer conversations completely off course.
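One common defensive pattern against the looping behavior described above is to cap the number of turns and stop when the model proposes the same action twice in a row. The sketch below is only an illustration, not something taken from the study: `run_agent`, `next_action`, the `"DONE"` sentinel, and the default turn limit are all hypothetical names and values, and the "model" is a plain Python function standing in for a real LLM call.

```python
from typing import Callable, List

def run_agent(next_action: Callable[[List[str]], str], max_turns: int = 8) -> List[str]:
    """Drive a hypothetical agent loop with two simple guards against runaway behavior."""
    history: List[str] = []
    for _ in range(max_turns):           # guard 1: hard cap on the number of turns
        action = next_action(history)    # stand-in for a call to a language model
        if action == "DONE":             # assumed sentinel meaning the task is finished
            break
        if history and action == history[-1]:
            # guard 2: the same action twice in a row is treated as a loop
            history.append("ABORTED: repeated action")
            break
        history.append(action)
    return history

# A scripted stand-in policy that finishes normally.
script = ["search", "summarize", "DONE"]
print(run_agent(lambda history: script[len(history)]))

# A stand-in policy that is stuck proposing the same action forever.
print(run_agent(lambda history: "search"))
```

Real orchestration layers need richer checks than this (for example, detecting longer cycles), but even a crude cap keeps a stuck conversation from running indefinitely.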

Testing Troubles

Traditional testing methods also proved ill-suited for AI-powered systems that behave unpredictably by design. Attempts to write test cases with fixed assertions rarely worked, since language models generate new, varied responses each time. One workaround was simply to run each test 10 times and pass it if 7 of the outputs were valid – a brute-force fix.
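The repeated-run workaround can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the participants' actual harness: `passes_with_threshold` and `make_stub_model` are hypothetical names, the stub replaces a real model call with a fixed cycle of outputs so the example is deterministic, and the check treats "7 of 10" as "at least 7".

```python
import itertools
from typing import Callable, Iterable

def passes_with_threshold(
    generate: Callable[[], str],
    is_valid: Callable[[str], bool],
    runs: int = 10,
    required: int = 7,
) -> bool:
    """Call generate() `runs` times and pass if at least `required` outputs are valid."""
    valid = sum(1 for _ in range(runs) if is_valid(generate()))
    return valid >= required

def make_stub_model(outputs: Iterable[str]) -> Callable[[], str]:
    """Hypothetical stand-in for a language model: cycles through canned outputs."""
    cycle = itertools.cycle(outputs)
    return lambda: next(cycle)

# 8 of the 10 canned outputs are valid, so the test passes.
flaky_model = make_stub_model(["ok"] * 8 + ["garbled"] * 2)
print(passes_with_threshold(flaky_model, lambda text: text == "ok"))  # True
```

The trade-off is cost: every test now makes ten model calls, and the threshold itself is a judgment call rather than a principled coverage measure.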

Engineers leaned more heavily into human evaluation and “metamorphic testing,” which focuses on structural changes rather than scrutinizing every output. But best practices remain undefined, and resources for manual reviewing at scale are scarce. Companies have not yet solved challenges like developing representative benchmarks and setting adequate test coverage thresholds.
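To make the metamorphic idea concrete: instead of asserting on exact text, a test can assert that two related inputs produce outputs with the same structure. The sketch below assumes, purely for illustration, that the copilot returns a flat JSON object and that a polite rephrasing of a request should not change which fields come back. `output_shape`, `same_structure`, and `stub_model` are hypothetical names, and the stub stands in for a real model.

```python
import json
from typing import Callable, List, Tuple

def output_shape(raw: str) -> List[Tuple[str, str]]:
    """Reduce a JSON object to its structure: sorted (field name, value type) pairs."""
    data = json.loads(raw)
    return sorted((key, type(value).__name__) for key, value in data.items())

def same_structure(model: Callable[[str], str], prompt: str, variant: str) -> bool:
    """Metamorphic check: related prompts should yield identically shaped outputs."""
    return output_shape(model(prompt)) == output_shape(model(variant))

def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for a model: the wording varies, the structure does not."""
    return json.dumps({
        "summary": f"Response to: {prompt.strip().lower()}",
        "steps": len(prompt.split()),
    })

# The two responses differ in content but share the same fields and types.
print(same_structure(stub_model, "Rename the file", "Please rename the file"))  # True
```

A check like this says nothing about whether the content is correct, which is one reason human review stays in the picture.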

“The hard parts are testing and benchmarks, especially for more qualitative output,” said one participant. “Humans will always have to be in the loop.”

An Urgent Need for Tools and Training

Across all areas, the lack of standards, centralized knowledge and tailored tools ranked among engineers’ greatest obstacles. Most had to forge their own learning paths across scattered online resources and nascent communities of practice. Newcomers must set aside ingrained software methods and rethink development fundamentals like testing.

“Someone coming into it needs to come in with an open mind,” a survey respondent advised. “The idea of testing is not what you thought it was.”

Ultimately, participants yearned for clearer guardrails around issues of responsible AI development like safety, security, privacy, and algorithmic bias. They also called for more integrated toolchains purpose-built for AI software lifecycles – spanning prompt authoring, orchestration, monitoring, evaluation, and benchmarking.

An Engineering Discipline Taking Shape

While the research illustrates AI Engineering’s uncertain terrain, it also charts the rise of a new software methodology. Tools like prompt chaining interfaces, automated benchmark generators and model monitoring systems are beginning to emerge from both startups and tech giants. Online forums teem with advice on prompt strategies, toolkit combinations and hands-on lessons learned.

In time, these individual efforts may coalesce into defined procedures, formal educational programs, and an extensive toolkit ecosystem – much like earlier shifts that established client/server programming, mobile development and DevOps as formal disciplines.

For now, much pioneering work remains to tame AI’s wild promises into domesticated innovations. But the travails chronicled in this paper are a necessary step along the technology’s march into the mainstream. The researchers hope to spur more studies and tools that can smooth the road for future AI builders.

“This serves as a foundation for guiding the way toward a more streamlined and efficient future for AI-first software development,” Parnin concludes.