Anthropic’s Model Context Protocol: Building an ‘ODBC for AI’ in an Accelerating Market

Anthropic has recently announced the Model Context Protocol (MCP), an open-source initiative aimed at simplifying and standardizing interactions between AI models and external systems. This effort is reminiscent of the role that ODBC (Open Database Connectivity) played for databases in the 1990s: making connectivity simpler and more consistent. However, MCP’s scope is broader and the ecosystem it addresses is far more complex, and it could become a foundational tool for AI model integration if it gains traction. In this analysis, we explore MCP’s potential, the challenges it faces, and the opportunities it offers to enterprise developers.

Table of contents

- Understanding MCP: Tackling the Complexity of AI Integration

- Technical Architecture: Security First, Scalability Next

- Market Dynamics: Betting on Developer Experience Over Performance

- Integration Challenges: Bridging AI and Enterprise Systems

- Opportunities and Risks: Charting MCP’s Path Forward

- Recommendations for Enterprise Teams

- The Road Ahead: MCP’s Future in AI Integration

Understanding MCP: Tackling the Complexity of AI Integration

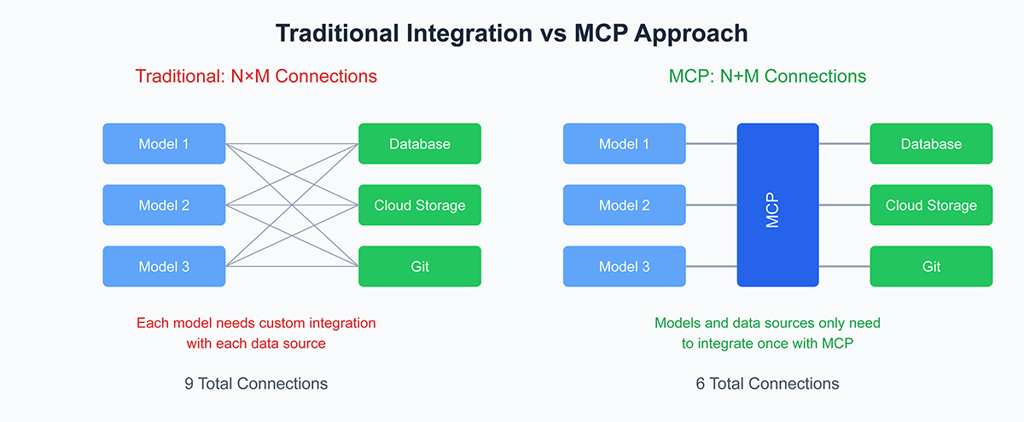

MCP is designed to solve a fundamental problem in enterprise AI adoption: the N×M integration problem, in which each of N AI applications needs its own custom integration with each of M tools and data sources. Where ODBC standardized how applications connect to databases, MCP seeks to do the same for AI models, providing a consistent way for AI to interact with diverse environments like local file systems, cloud services, collaboration platforms, and enterprise applications.

The protocol aims to eliminate the need for developers to write redundant custom integration code every time they need to link a new tool or data source to an AI system. Instead, MCP provides a unified method for all these connections, allowing developers to spend more time building features and less time on integration. To illustrate MCP’s broad utility, imagine AI models needing to interact with different types of data: from PostgreSQL databases to cloud platforms like Google Drive or Slack. MCP’s goal is to streamline these interactions, reducing the manual coding that’s currently necessary.
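The arithmetic behind the N×M problem can be made concrete with a short sketch. The application and data-source names below are illustrative placeholders (a few borrowed from this article), not an inventory of actual MCP integrations. The point is only that point-to-point connectors grow multiplicatively, while adapters to a shared protocol grow additively.

```python
from itertools import product

# Illustrative placeholders, not a list of real MCP clients or servers.
ai_apps = ["chat_assistant", "code_editor", "support_bot"]
data_sources = ["postgresql", "google_drive", "slack", "local_files"]


def point_to_point(apps, sources):
    """Every app needs its own connector for every source: N x M pairs."""
    return list(product(apps, sources))


def shared_protocol(apps, sources):
    """Each app and each source implements the protocol once: N + M adapters."""
    app_side = [(app, "protocol") for app in apps]
    source_side = [("protocol", source) for source in sources]
    return app_side + source_side


custom = point_to_point(ai_apps, data_sources)
adapters = shared_protocol(ai_apps, data_sources)

print(f"custom connectors: {len(custom)}")    # 3 * 4 = 12
print(f"protocol adapters: {len(adapters)}")  # 3 + 4 = 7
```

Under these assumptions, adding a fifth data source costs three new connectors in the point-to-point model but only one new adapter when everything speaks a common protocol.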

Technical Architecture: Security First, Scalability Next

MCP uses a client-server architecture with an emphasis on local-first connections. This choice reflects current concerns around privacy and security, especially as AI systems increasingly access sensitive data. By requiring explicit permissions per tool and per interaction, the protocol is designed to let developers maintain tight control over what data models can access. This local-first approach is well suited to small-scale, desktop-focused environments, making it easier for developers to experiment without major security concerns.
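One way to picture per-tool, per-interaction permissions is a gate that sits between the model’s request and the tool itself. The Python sketch below is a hypothetical illustration and not the MCP SDK: `ToolCall`, `PermissionGate`, and the `approve` callback are names invented here, and the hard-coded policy stands in for what would normally be a prompt shown to the user.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class ToolCall:
    """A single request from the model to run a named tool (hypothetical type)."""
    tool: str
    arguments: Dict[str, Any]


@dataclass
class PermissionGate:
    """Runs a tool only if it is enabled and this specific call is approved."""
    approve: Callable[[ToolCall], bool]  # consulted on every call, never cached
    tools: Dict[str, Callable[..., Any]] = field(default_factory=dict)
    audit_log: List[str] = field(default_factory=list)

    def register(self, name: str, fn: Callable[..., Any]) -> None:
        # Per-tool permission: only registered tools can ever be reached.
        self.tools[name] = fn

    def call(self, request: ToolCall) -> Any:
        if request.tool not in self.tools:
            raise PermissionError(f"tool not enabled: {request.tool}")
        # Per-interaction permission: each individual call is checked.
        if not self.approve(request):
            self.audit_log.append(f"denied {request.tool}")
            raise PermissionError(f"call denied: {request.tool}")
        self.audit_log.append(f"allowed {request.tool}")
        return self.tools[request.tool](**request.arguments)


def approve_demo(request: ToolCall) -> bool:
    """Stand-in policy: allow file reads under /tmp/demo/ only."""
    path = str(request.arguments.get("path", ""))
    return request.tool == "read_file" and path.startswith("/tmp/demo/")


gate = PermissionGate(approve=approve_demo)
# The lambda fakes a file read so the sketch touches no real files.
gate.register("read_file", lambda path: f"<contents of {path}>")

allowed = gate.call(ToolCall("read_file", {"path": "/tmp/demo/notes.txt"}))
print(allowed)
```

The design choice worth noting is that approval is evaluated per call rather than granted once per tool, so a request for a path outside the approved directory is rejected even though `read_file` itself is enabled.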

However, this focus on local connections does create potential barriers for enterprise deployment. The need for scalability and distributed capabilities means that deploying MCP in cloud-native environments may be complex, especially where high-throughput operations are necessary. Anthropic’s engineering team is actively working on extending MCP to support remote connections, but remote support adds layers of complexity to security, deployment, and authentication.

For now, MCP is best viewed as a prototyping tool—an experimental framework for developers to test out integrations and build local, small-scale solutions. As it evolves, the community’s involvement will play a key role in MCP’s development, particularly in shaping it into a production-ready tool that can handle enterprise-grade scalability.

Market Dynamics: Betting on Developer Experience Over Performance

The AI industry is currently characterized by rapid evolution, with new features and capabilities emerging almost weekly. In this dynamic environment, Anthropic’s strategy for MCP stands out by focusing on enhancing the developer experience rather than competing directly on model performance. While models such as OpenAI’s GPT-4o and Google’s Gemini push the boundaries of raw performance, MCP aims to simplify the developer’s workflow and make integration with existing systems as seamless as possible.

Early adopters, such as Sourcegraph Cody and the Zed editor, have found value in MCP’s approach. Their feedback indicates that MCP’s promise of standardizing integration resonates, especially as enterprises look to bring AI tools into production with minimal overhead. However, the path to success requires more than just a handful of early adopters. To reach its potential, MCP needs major support from cloud providers like AWS, Azure, and Google Cloud. Without their buy-in, MCP risks becoming another niche tool, overshadowed by proprietary alternatives.

Technology history offers many examples of standards that either became ubiquitous or faded into obscurity. ODBC and the Language Server Protocol (LSP) are notable examples of successful standardization, but there are countless others that failed due to lack of adoption or industry consensus. Anthropic needs to secure broad enterprise interest and ensure that MCP gains critical mass before competing standards gain a foothold.

Integration Challenges: Bridging AI and Enterprise Systems

AI integration is a major bottleneck for enterprise adoption. Every new AI tool introduced into an organization typically requires a bespoke integration, often resulting in time-consuming development and expensive maintenance. MCP aims to streamline this by providing pre-built integrations for popular enterprise systems like GitHub, Google Drive, and PostgreSQL.
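The contrast between bespoke integrations and pre-built ones behind a common interface can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the `Connector` interface, its method names, and the in-memory stand-ins are hypothetical and do not reflect MCP’s actual types or any real GitHub or PostgreSQL client.

```python
from typing import Dict, List, Protocol


class Connector(Protocol):
    """Hypothetical common interface; not the MCP SDK's actual types."""
    name: str

    def list_resources(self) -> List[str]: ...

    def read(self, resource: str) -> str: ...


class InMemoryConnector:
    """Stand-in for a real integration: canned data, no network calls."""

    def __init__(self, name: str, data: Dict[str, str]) -> None:
        self.name = name
        self._data = data

    def list_resources(self) -> List[str]:
        return sorted(self._data)

    def read(self, resource: str) -> str:
        return self._data[resource]


def gather_context(connectors: List[Connector]) -> Dict[str, str]:
    """Application code written once against the interface, for any connector."""
    context: Dict[str, str] = {}
    for connector in connectors:
        for resource in connector.list_resources():
            context[f"{connector.name}:{resource}"] = connector.read(resource)
    return context


connectors = [
    InMemoryConnector("github", {"README.md": "# Demo repo"}),
    InMemoryConnector("postgres", {"orders": "3 rows"}),
]
context = gather_context(connectors)
print(context)
```

In this sketch, `gather_context` never changes when a new system is added; only a new connector is written, which is the maintenance saving the paragraph above describes.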

However, early feedback suggests that MCP’s documentation is often too focused on implementation details, which may make it challenging for teams to quickly grasp the protocol’s broader benefits. Providing clear, conceptual overviews, alongside practical examples and use cases, will be essential for driving adoption among developers and among decision-makers who may be less technical. Well-structured documentation is not just a technical necessity; it’s a strategic tool that will either drive or hinder adoption, depending on how effectively it is handled.

Opportunities and Risks: Charting MCP’s Path Forward

Anthropic has positioned MCP as an open standard rather than a proprietary solution, hoping to foster community-driven adoption and innovation. This approach encourages contributions from a diverse set of developers and companies, but it also opens up the possibility of fragmentation if competing standards emerge or if consensus cannot be reached on protocol updates.

Key considerations for MCP moving forward include:

- Enterprise-Grade Security: MCP must evolve its security model to accommodate high-stakes production deployments. This requires balancing tight data access controls with ease of deployment, ensuring that enterprises can integrate AI without adding significant friction.

- Major Player Adoption: The involvement of major cloud providers is crucial. Support from industry giants like AWS, Azure, or Google Cloud would serve as a major endorsement and could accelerate uptake across the AI landscape.

- Scalable Deployment Patterns: MCP must develop deployment blueprints that are suited for enterprise needs. This means ensuring it can handle multi-user environments and distributed operations efficiently and securely.

Recommendations for Enterprise Teams

For enterprise teams considering MCP, the current iteration is best used as a prototyping tool. Its strong focus on local-first security and straightforward setup makes it an excellent option for experimenting with AI integration in a controlled environment. To get the most value from MCP:

- Begin with Prototyping: Utilize MCP to quickly create and test integrations in a small-scale setting, gaining experience with its capabilities and limitations.

- Monitor Industry Adoption: Pay attention to which vendors and platforms adopt MCP. Participation from cloud providers or other influential players will be a key indicator of MCP’s viability as a long-term solution.

- Engage in Community Development: Consider contributing to MCP’s evolution. Open standards grow stronger with community input, and enterprises have a unique opportunity to help shape a tool that may become foundational to the AI ecosystem.

The Road Ahead: MCP’s Future in AI Integration

The launch of MCP represents a significant step forward in the effort to simplify AI integration. Its emphasis on developer experience and streamlined connections over raw model performance positions it uniquely in a crowded AI landscape. The journey ahead for MCP depends on whether it can address its current limitations—particularly around scalability and documentation—and secure the support of major industry players.

For now, organizations interested in MCP should approach it as a development tool, keeping a close watch on its evolution. If Anthropic can overcome the challenges of enterprise readiness, governance, and scalability, MCP could indeed become the ‘ODBC for AI’—a critical infrastructure layer that makes AI integration more accessible and manageable for everyone.